It was good to learn that the government publishes a lot of the data it has collected over the years at https://data.gov.in/

I picked the amenities data about villages from https://data.gov.in/catalog/village-amenities-census-2011 to do some analysis.

I believe the government is doing sufficient analysis to find where, and with how much force, it should use its machinery to promote its schemes.

I have been doing some analysis using Apache Spark and the ecosystem around it, but I was interested in a quick visualization that would help me understand the data quickly. One option was R, as I wanted to build the reports quickly; I explored some of the capabilities of R and Shiny apps in my earlier post on cluster analysis of banking data.

Recently I came to know about a fantastic tool, Zeppelin. It is a web-based notebook with built-in support for Apache Spark and for multiple languages such as Scala, Python and Spark SQL and, most importantly, it is open source.

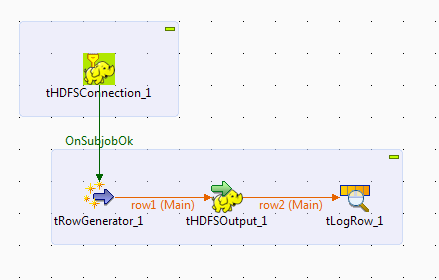

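I picked one of the CSV files from the whole data set, the one for Gulbarga district in Karnataka state, and started doing some analysis. The first step was loading the data into a DataFrame/table.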
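The notebook code itself is not reproduced in this post, so here is only a rough, self-contained sketch of the loading step in plain Python. It uses the standard-library `sqlite3` module as a stand-in for a Spark table so that it runs anywhere. The column names and the three sample rows are made up for illustration; the real census file has many more columns with different names.

```python
import csv
import io
import sqlite3

# Made-up rows standing in for the district CSV (hypothetical column names).
SAMPLE_CSV = """village_name,total_population,male_population,female_population
Village A,1200,630,570
Village B,450,220,230
Village C,3100,1700,1400
"""


def load_villages(csv_text):
    """Parse CSV text and load it into an in-memory SQLite table `villages`."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE villages ("
        "village_name TEXT, total_population INTEGER, "
        "male_population INTEGER, female_population INTEGER)"
    )
    rows = [
        (
            record["village_name"],
            int(record["total_population"]),
            int(record["male_population"]),
            int(record["female_population"]),
        )
        for record in csv.DictReader(io.StringIO(csv_text))
    ]
    conn.executemany("INSERT INTO villages VALUES (?, ?, ?, ?)", rows)
    return conn


conn = load_villages(SAMPLE_CSV)
village_count = conn.execute("SELECT COUNT(*) FROM villages").fetchone()[0]
print("villages loaded:", village_count)
```

Once the rows sit in a named table, every later step can be written as an ordinary SQL query against it, which is the same idea as registering a DataFrame as a table in the notebook.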

It is also easy to accommodate Spark SQL in the notebook paragraphs/sections.

The following is a very simple query to show the population spread across the villages of Gulbarga district.
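The original query is not shown here, so the following is just a sketch of what a population-spread query could look like: villages are grouped into population bands and counted. It runs against an in-memory SQLite table instead of Spark SQL, and the table name, column names, band boundaries and sample rows are all assumptions made for illustration.

```python
import sqlite3

# Made-up sample data; the real table would come from the district CSV.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE villages (village_name TEXT, total_population INTEGER)")
conn.executemany(
    "INSERT INTO villages VALUES (?, ?)",
    [("Village A", 1200), ("Village B", 450), ("Village C", 3100), ("Village D", 800)],
)

# Assign each village to a population band, then count villages per band.
SPREAD_QUERY = """
SELECT population_band, COUNT(*) AS villages
FROM (
    SELECT CASE
             WHEN total_population < 1000 THEN 'under 1000'
             WHEN total_population < 3000 THEN '1000 to 2999'
             ELSE '3000 and above'
           END AS population_band,
           total_population
    FROM villages
) AS banded
GROUP BY population_band
ORDER BY MIN(total_population)
"""

spread = conn.execute(SPREAD_QUERY).fetchall()
for band, count in spread:
    print(band, count)
```

A result of this shape, one row per band with a count, is what a notebook bar or pie chart can plot directly.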

The government makes policies, spends money on them, and judges their effectiveness by the results. We can use the collected data to understand where the schemes should penetrate most, i.e. find the villages that need the government schemes most. One example is a policy to narrow the gap in the male-female ratio: from the available data we can see where the focus should be greatest.

I changed the minbenchmark to 80% and the result was updated on the fly.
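To make the benchmark idea concrete, here is a minimal sketch in plain Python. It assumes that the benchmark means "females per 100 males" and that the villages of interest are those falling below it; the post does not spell out the exact definition, so treat both as assumptions. The function names and sample rows are hypothetical. In the notebook the threshold would come from an input field rather than a function argument.

```python
def females_per_100_males(male, female):
    """Return the number of females per 100 males."""
    return 100.0 * female / male


def villages_below_benchmark(rows, min_benchmark):
    """Return names of villages whose ratio is below the given benchmark."""
    return [
        name
        for name, male, female in rows
        if females_per_100_males(male, female) < min_benchmark
    ]


# Made-up (village, male population, female population) rows.
SAMPLE_ROWS = [
    ("Village A", 630, 570),
    ("Village B", 220, 230),
    ("Village C", 1700, 1400),
    ("Village D", 500, 390),
]

# Re-running with a different benchmark changes the list of flagged villages.
for benchmark in (80, 85):
    print(benchmark, villages_below_benchmark(SAMPLE_ROWS, benchmark))
```

Raising the benchmark widens the set of villages flagged for attention, which is the effect seen when the value is changed in the notebook.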

I have started to analyse this data to check the education facilities in the villages. That work is in progress, and I will publish the findings in later posts.

Installation details:

a) Zeppelin was deployed on Ubuntu in VirtualBox with Windows as the host.

b) Set your JAVA_HOME (Java 1.7) before starting Zeppelin.

c) To start it, execute 'zeppelin-daemon.sh start' in ZEPPELIN_HOME/bin
